Human-Robot Interaction

“Hey Siri, how can you understand me?”

This is a voice assistant. Photo by Ben Kolde on Unsplash.

Voice assistants, such as Siri, have risen in popularity over the years. Juniper Research estimated that over 3 billion voice assistants were in use last February! To suit the needs of their users, voice assistants have to keep improving. Last month, the newest Alexa update came out: Alexa can now ask you a question instead of only ever answering one. Before, voice assistants only asked “did you mean…” or simply said “I don’t understand you”. Now, they might ask for clarification on a certain word while keeping the context in mind. Have you ever wondered how this works?

Some voice assistants, like Siri, live on your phone, while others are standalone devices, like the one in the picture above. They exist to make your life easier and to help you with small tasks. For example, they can quickly set a timer or answer a question such as “what is the length of ‘Frozen II’?”.

However, having a full-blown conversation is not yet possible. Part of the reason is the way humans talk, which is discussed later in this article. Another reason is emotion. Dr. Arjan van Hessen, an expert in linguistics and speech recognition at Utrecht University, thinks the biggest challenge in developing speech recognition is getting devices to detect emotions and react to them appropriately. According to him, this is one reason big companies do not use speech recognition in their call centers. Communicating through speech recognition is less personal, which might not suit companies that advertise a personal approach. Speech recognition is also a poor fit when the call itself is very personal. For example, when you call an emergency number, you would not want your emotions to be ignored.

In most cases, however, such as simple questions, emotions do not need to be accounted for. This article is about those cases. How do voice assistants know what is being said?

To use Siri, you first calibrate it by saying “Hey Siri” several times. It then knows what that phrase sounds like when you say it. After this, your phone keeps listening to all kinds of sounds. If it hears a sound that very closely resembles your version of “Hey Siri”, it wakes up and you can ask it anything! Even though only “Hey Siri” was calibrated, it can recognize almost every other word you say, even if you talk very fast or have a thick accent that makes you sound different from most people. How can Siri still understand you?
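To get a feel for the “wake up when it sounds like your ‘Hey Siri’” step, here is a toy sketch in Python. It is not how Apple does it (real systems reportedly rely on trained neural networks rather than a single template). Everything here is an assumption for illustration: each recording is reduced to a short, made-up list of numbers, the calibration recordings are averaged into one personal template, and a new sound only counts as the wake phrase if its cosine similarity to that template passes a hypothetical threshold of 0.95.

```python
import math

# Hypothetical, simplified "feature vectors": each recording of "Hey Siri"
# is reduced to a few made-up numbers (for example, loudness in pitch bands).
def average(vectors):
    """Average several calibration recordings into one personal template."""
    n = len(vectors)
    return [sum(values) / n for values in zip(*vectors)]

def cosine_similarity(a, b):
    """1.0 means the two sounds have exactly the same 'shape'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def is_wake_word(sound, template, threshold=0.95):
    """Wake up only if the sound very closely resembles the template.

    The 0.95 threshold is an arbitrary value chosen for this sketch.
    """
    return cosine_similarity(sound, template) >= threshold

# Three made-up calibration recordings of "Hey Siri".
calibration = [
    [0.9, 0.2, 0.4, 0.7],
    [1.0, 0.3, 0.5, 0.6],
    [0.8, 0.2, 0.4, 0.8],
]
template = average(calibration)

print(is_wake_word([0.9, 0.25, 0.45, 0.7], template))  # → True (close match)
print(is_wake_word([0.1, 0.9, 0.8, 0.1], template))    # → False (different sound)
```

The threshold is the trade-off: set it too low and your phone wakes up for every sound that vaguely resembles the phrase, set it too high and it ignores you when you have a cold.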

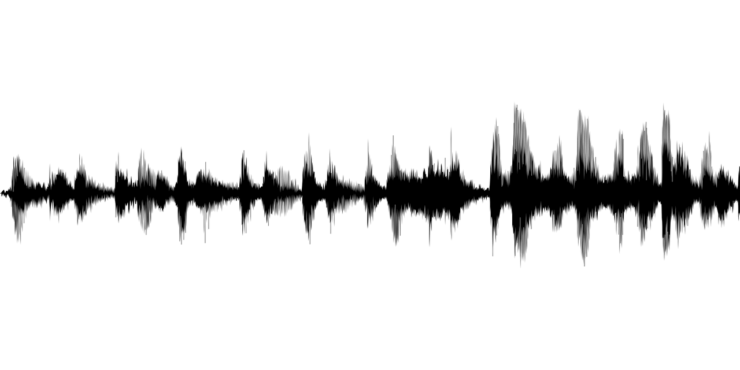

Sound as registered by your phone. Image by Gordon Johnson from Pixabay.

The answer lies in the recording of your voice. When your phone records you, your voice looks like the image above. The audio is then cut into very small pieces, each roughly the length of a single letter. Each piece is compared to what every letter sounds like on average, and from that comparison a likelihood is computed for each letter it could be. Siri is a bit smarter than that, because it also uses the previous letters to judge how likely each next letter is. For example, if your phone thinks you have said “wha” so far, the next letter is more likely to be “t”, finishing the word “what”, than “q”, since “whaq” is not a word. Using this method, Siri now hears you correctly about 90% of the time!
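Here is a small Python sketch of that idea, following the article’s simplified letter-by-letter picture. The six-word vocabulary and the “sounds like” scores are invented for illustration, and multiplying the two numbers together is just one simple way to combine them. The sketch shows how context can overrule the sound alone: even if the audio sounds slightly more like “q”, the letters heard so far make “t” the better guess.

```python
# Hypothetical tiny vocabulary standing in for a real language model.
VOCABULARY = ["what", "when", "where", "whale", "who", "why"]

def context_probability(prefix, letter):
    """How likely `letter` is to come next, given the letters heard so far.

    Counts how many known words continue `prefix` with `letter`.
    """
    matching = [w for w in VOCABULARY if w.startswith(prefix) and len(w) > len(prefix)]
    if not matching:
        return 0.0
    continuing = [w for w in matching if w[len(prefix)] == letter]
    return len(continuing) / len(matching)

def best_next_letter(prefix, sound_scores):
    """Combine 'what the piece sounded like' with 'what usually comes next'."""
    combined = {
        letter: score * context_probability(prefix, letter)
        for letter, score in sound_scores.items()
    }
    return max(combined, key=combined.get)

# Made-up scores: the audio piece sounds a bit more like "q" than "t".
sound_scores = {"t": 0.4, "q": 0.5, "l": 0.1}
print(best_next_letter("wha", sound_scores))  # → t
```

Only “what” and “whale” continue “wha”, so “t” and “l” each get a context probability of 0.5 and “q” gets 0, which is why “t” wins.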

Just knowing the words you say, however, is not enough to answer you. Siri also needs to know which words are important. Dr. van Hessen stated that humans use a lot of filler words when talking to each other and often respond with “yes” or “uh-huh” to show they have understood. We know those words aren’t important, but computers do not know that by default: they try to give meaning to everything. Yet in most cases, voice assistants manage not to focus on those words. Why not?
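The simplest possible way to ignore filler words is to keep a list of them and throw them away. The Python sketch below does exactly that; the list of filler words is hypothetical and much shorter than anything a real assistant would use.

```python
import string

# Hypothetical list of filler words, made up for this sketch.
FILLER_WORDS = {"um", "uh", "uh-huh", "hmm", "er"}

def important_words(sentence):
    """Drop filler words so only the meaningful part of a request remains."""
    # Lowercase, split on spaces and strip punctuation like "," or "?" from each word.
    words = [w.strip(string.punctuation) for w in sentence.lower().split()]
    return [w for w in words if w and w not in FILLER_WORDS]

print(important_words("Um, uh, set a timer for ten minutes"))
# → ['set', 'a', 'timer', 'for', 'ten', 'minutes']
```

A fixed list like this is crude: words such as “like” or “so” can be filler in one sentence and important in another, which is exactly why assistants also look at how a sentence is built.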

To understand what is important in a sentence, voice assistants need to be able to break it down into pieces. In school, you learned about different types of words, such as nouns, verbs, and adjectives. Siri knows these types of words too. From a word’s position in a sentence, Siri can figure out what the word means. In “the rose smells nice”, the word “rose” is a noun and therefore refers to the flower. In “the sun rose”, however, it is a verb: the past tense of “rise”.
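Here is a deliberately tiny Python sketch of the “rose” example. It uses a single made-up rule: if the word comes right after a word like “the” or “a”, treat it as a noun, otherwise as a verb. Real systems use taggers trained on large amounts of labeled text rather than one hand-written rule, and the word lists and meanings below are assumptions for illustration only.

```python
# Hypothetical word list for this sketch: words that usually come before a noun.
DETERMINERS = {"the", "a", "an", "this", "that", "my", "your"}

# Hypothetical meanings for the ambiguous word "rose".
MEANINGS = {
    ("rose", "noun"): "a flower",
    ("rose", "verb"): "past tense of 'rise'",
}

def guess_part_of_speech(words, index):
    """One made-up rule: right after 'the', 'a', ... it is a noun, otherwise a verb."""
    previous = words[index - 1] if index > 0 else None
    return "noun" if previous in DETERMINERS else "verb"

def meaning_of(sentence, word):
    """Return the guessed word type and meaning of `word` in `sentence`."""
    words = sentence.lower().split()
    index = words.index(word)
    tag = guess_part_of_speech(words, index)
    return tag, MEANINGS[(word, tag)]

for sentence in ["the rose smells nice", "the sun rose"]:
    tag, description = meaning_of(sentence, "rose")
    print(f"{sentence}: {tag} ({description})")
```

In “the sun rose”, the word before “rose” is “sun”, not a word like “the”, so the rule picks the verb meaning.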

Siri also uses sentence structure to identify the type of question. If you ask “where’s the nearest movie theater?”, Siri knows this is a “where” question, so it searches for a location. It also knows that you are looking for the noun “movie theater” and that you want one near you. Siri then searches for answers based on the key words in your question.
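The two steps in that paragraph, working out the question type and picking out the key words, can be sketched in a few lines of Python. The mapping from question words to answer types and the list of unimportant words are hypothetical and far smaller than what a real assistant would need.

```python
import string

# Hypothetical mapping from question word to the kind of answer to look for.
QUESTION_TYPES = {
    "where": "location",
    "when": "time",
    "who": "person",
    "how": "method or amount",
    "what": "thing or fact",
}

# Hypothetical list of words that carry little meaning on their own.
STOP_WORDS = {"is", "the", "a", "an", "of", "to", "me"}

def analyze_question(question):
    """Return (answer type, key words) for a simple question."""
    text = question.lower().replace("\u2019", "'")  # treat curly apostrophes as straight
    words = [w.strip(string.punctuation) for w in text.split()]
    words = [w for w in words if w]
    if not words:
        return "unknown", []
    question_word = words[0].split("'")[0]  # "where's" -> "where"
    answer_type = QUESTION_TYPES.get(question_word, "unknown")
    key_words = [w for w in words[1:] if w not in STOP_WORDS]
    return answer_type, key_words

print(analyze_question("Where’s the nearest movie theater?"))
# → ('location', ['nearest', 'movie', 'theater'])
```

The answer type tells the assistant what kind of result to search for, and the key words tell it what to search with.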

Siri is not the only thing that uses voice recognition. Other voice assistants use these methods as well, and even the gaming industry has found a use for them. SpokeIt, an app currently in development, is designed to provide speech therapy in a fun way. Not every game is educational, though. In a horror ghost-hunting game that has taken the gaming community by storm over the past two weeks, the “ghost” can hear its own name and the questions you ask it, and respond accordingly.

So although understanding emotions and the meaning behind words remains a big challenge, speech recognition is already very accurate and used in many ways. The technology keeps advancing, and I am excited to see where we go from here!