How small can we go?

Computing realistic images

You probably recognize the situation: a photo is blurred because your phone was out of focus, or because you zoomed in too much. Scientists struggle with a similar problem. If they use a microscope to zoom in on something very small and take a picture, the image is always blurry. Even the best microscope cannot avoid this: the photo is distorted and inaccurate. Physicist Kevin Namink tries to solve this problem together with his colleagues at SVI (Scientific Volume Imaging). He explains where these distortions come from and how computers can help us get more realistic images of the microscopic world. Physics, computer science and math all come together when we look behind the scenes of microscopic images.

Figure 1: Photo of mitosis. Image by Dr. Alexia Ferrand, Imaging Core Facility, BioZentrum, University of Basel.

In figure 1 you see an example of a microscopy image. A microbiologist recognizes mitosis, but the details are blurred. Precisely those details of the image are interesting to scientists. Kevin Namink has done a lot of research on microscopes during his studies in physics, and he recognizes the problem of blurry images. He explains: “A beam of light is not a straight line, but has a width. Because you enlarge the size of the image, this width comes into the picture. The smallest source of light spreads out over multiple pixels.” So a ‘pixel cloud’ of light forms around each pixel, activating not only that pixel but also its neighbours. If we take a picture of a black dot, this effect creates a grey blur around the dot (see figure 2). This not only distorts the image; the blur also hides some of the details.

Figure 2: black dot
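
To make the pixel cloud a bit more concrete, here is a minimal sketch in Python. It is only an illustration under our own assumptions: the image is a single row of pixels, the cloud is a made-up three-pixel pattern called `PIXEL_CLOUD`, and we use one bright point on a dark background rather than a black dot. The spreading works the same way in both cases.

```python
def blur(signal, kernel):
    """Spread every pixel of a 1D row over its neighbours (nothing is added beyond the edges)."""
    half = len(kernel) // 2
    out = [0.0] * len(signal)
    for i, value in enumerate(signal):
        for k, weight in enumerate(kernel):
            j = i + k - half          # neighbour that receives part of the light
            if 0 <= j < len(signal):
                out[j] += value * weight
    return out

# Hypothetical pixel cloud: half of the light stays put, a quarter leaks to each side.
PIXEL_CLOUD = [0.25, 0.5, 0.25]

row = [0, 0, 0, 1, 0, 0, 0]           # one point of light in the middle
blurred = blur(row, PIXEL_CLOUD)
print(blurred)                         # [0.0, 0.0, 0.25, 0.5, 0.25, 0.0, 0.0]
```

The single point now lights up three pixels, and none of them is as bright as the original. A real microscope does the same thing in three dimensions, with a cloud whose shape depends on the instrument.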

A realistic picture

Physicists are very good at describing this pixel cloud. Kevin: “We know that neighbouring pixels contain information about each other. In that sense, we know more than is apparent from the photo.” Physicists like Kevin use that knowledge to recreate the original image. This process is called deconvolution. You can imagine the staggering number of calculations: for each of the thousands of pixels you calculate the influence of its neighbours. That is why SVI makes software that lets the computer do the calculations. The result can be seen in figure 3. Besides deconvolution, their software also has other functions, like colouring different parts of an image to distinguish and separate them.

Figure 3: figure 1 processed by Huygens software.
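
How can a computer undo a blur? The article does not say which method the Huygens software uses, so the sketch below swaps in a well-known textbook technique, Richardson-Lucy deconvolution, purely as an illustration. It assumes a one-dimensional image and a pixel cloud that is known exactly (the made-up `PIXEL_CLOUD`), which is far simpler than real microscopy data.

```python
def blur(signal, kernel):
    """Spread every pixel of a 1D row over its neighbours."""
    half = len(kernel) // 2
    out = [0.0] * len(signal)
    for i, value in enumerate(signal):
        for k, weight in enumerate(kernel):
            j = i + k - half
            if 0 <= j < len(signal):
                out[j] += value * weight
    return out

def richardson_lucy(observed, kernel, iterations=200):
    """Repeatedly adjust a guess until blurring the guess reproduces the observed row."""
    estimate = [1.0] * len(observed)          # start from a flat grey guess
    mirrored = kernel[::-1]
    for _ in range(iterations):
        reblurred = blur(estimate, kernel)    # what would the guess look like through the microscope?
        ratio = [o / r if r > 0 else 0.0 for o, r in zip(observed, reblurred)]
        correction = blur(ratio, mirrored)    # share each pixel's mismatch with its neighbours
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate

PIXEL_CLOUD = [0.25, 0.5, 0.25]               # hypothetical, assumed to be known exactly
observed = blur([0, 0, 0, 1, 0, 0, 0], PIXEL_CLOUD)
restored = richardson_lucy(observed, PIXEL_CLOUD)
print([round(v, 3) for v in restored])
```

In this noise-free toy case almost all of the light ends up back in the middle pixel. Real images contain noise and the pixel cloud is never known perfectly, which is part of what makes the real problem so much harder.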

For this kind of image processing, it is vital that the end result is as close to reality as possible. SVI’s software is used, for instance, by microbiologists who study different properties of cells. You don’t want your software to create details that were not part of the original image, the way a Snapchat filter can add a few wrinkles to a selfie. To prevent this from happening, SVI bases each algorithm on scientific literature.

Other factors, such as the colour of the microscope light and the type of microscope used, are also taken into account in the calculations. Both the wavelength of the light and the angle from which the photo is taken influence the shape of the pixel cloud. These days, many new microscopy techniques are being developed. “Images created with these techniques require different processing techniques,” stresses Kevin. The physicist’s challenge is to support these new techniques with new software.

Faster image processing

A different challenge is more mathematical in nature. The files microbiologists work with are becoming increasingly massive. One microscopy image can take up as much storage space as 10,000 selfies. Sometimes it takes a whole night to process one image. This is a problem when you’re working under pressure or if you need to process multiple images. In the past, this could be solved by building computers with more power. “But nowadays, two times as much computing power also costs twice as much money,” Kevin notes. That is why a lot of effort is now put into the parallelization of existing algorithms. “Using parallel processes as often as possible is now the best way of increasing computing speed.”

During my Master’s in mathematics, I contributed to the parallelization of one of these algorithms. The idea is that a computer no longer follows a step-by-step protocol, but works on different tasks simultaneously. Some computers, for example, have four processor cores: calculators, if you will. If you divide an image into four and distribute the pieces among the four cores, the algorithm can run up to four times as fast. Currently, we are even using graphics cards for this, because they consist not of four but of thousands of little calculators. This could speed up the process a lot.

There are, however, disadvantages to using graphics cards. Each one of these little calculators is less powerful than a processor core. For my project at SVI this meant we had to use a much simpler algorithm so that the graphics card could run it. This shows that we have to change existing algorithms before we can use graphics cards for fast parallel processes.
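
The idea of cutting an image into pieces and handing each piece to its own calculator can be sketched as follows. This is a simplified illustration, not SVI's code: the task (`invert`, which flips dark and light) is a stand-in we chose because every pixel can be handled without looking at its neighbours, and the helper names are our own. Python threads are used only to show the structure; for a real speed-up you would use separate processes or a graphics card.

```python
from concurrent.futures import ThreadPoolExecutor

def invert(strip):
    """Per-pixel task: needs no information from neighbouring pixels."""
    return [[255 - pixel for pixel in row] for row in strip]

def split_rows(image, parts):
    """Cut the image into `parts` horizontal strips of nearly equal height."""
    size, extra = divmod(len(image), parts)
    strips, start = [], 0
    for p in range(parts):
        end = start + size + (1 if p < extra else 0)
        strips.append(image[start:end])
        start = end
    return strips

def process_in_parallel(image, workers=4):
    strips = split_rows(image, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        processed = list(pool.map(invert, strips))   # map returns results in strip order
    # Stitch the strips back together, top to bottom.
    return [row for strip in processed for row in strip]

image = [[(x * y) % 256 for x in range(8)] for y in range(8)]
assert process_in_parallel(image) == invert(image)   # same result as doing it in one go
```

For a task like this, stitching is trivial because the strips never need to talk to each other. That stops being true as soon as the result for one pixel depends on pixels in another strip.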

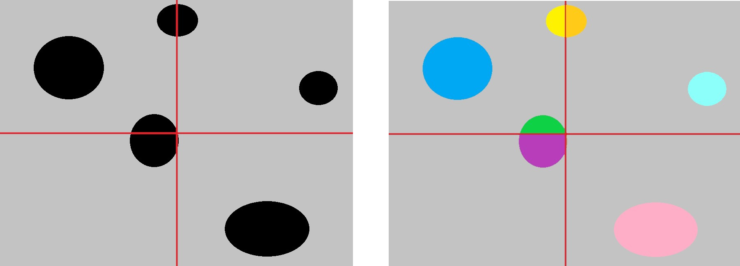

On top of that, it is not at all easy to recombine the thousands of processed pieces into one big picture. How does a computer decide that the green and purple parts below form one shape together and need to get the same colour? For some algorithms and images, you even need information from the entire image to process a small part. We don’t know yet whether it is always possible to divide images in this way and stitch them back together.

Figure 4: a parallel algorithm to colour dots with different colours.
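
For the colouring problem, one possible way to stitch the pieces back together is sketched below. It is an illustration under our own assumptions, not the algorithm from the figure: the image is cut into just two tiles (so it must be at least two pixels wide), each tile numbers its own shapes independently, and afterwards we walk along the seam and merge the numbers of shapes that touch. All function names are hypothetical.

```python
def label_tile(tile, first_label):
    """Give every connected shape in one tile its own number (0 means background)."""
    rows, cols = len(tile), len(tile[0])
    labels = [[0] * cols for _ in range(rows)]
    next_label = first_label
    for r in range(rows):
        for c in range(cols):
            if tile[r][c] and not labels[r][c]:
                labels[r][c] = next_label
                stack = [(r, c)]
                while stack:                      # flood fill over direct neighbours
                    y, x = stack.pop()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and tile[ny][nx] and not labels[ny][nx]):
                            labels[ny][nx] = next_label
                            stack.append((ny, nx))
                next_label += 1
    return labels, next_label

def find(parent, label):
    """Follow the merge records until we reach the label that represents the whole shape."""
    while parent[label] != label:
        parent[label] = parent[parent[label]]
        label = parent[label]
    return label

def label_in_two_tiles(image):
    mid = len(image[0]) // 2
    left = [row[:mid] for row in image]
    right = [row[mid:] for row in image]
    offset = len(image) * mid                     # the left tile cannot need more labels than pixels
    left_labels, left_end = label_tile(left, 1)               # these two calls are independent,
    right_labels, right_end = label_tile(right, offset + 1)   # so they could run at the same time
    parent = {label: label for label in range(1, left_end)}
    parent.update({label: label for label in range(offset + 1, right_end)})
    for l_row, r_row in zip(left_labels, right_labels):
        a, b = l_row[-1], r_row[0]                # the two pixels on either side of the seam
        if a and b:                               # shapes touch: record that they are one shape
            ra, rb = find(parent, a), find(parent, b)
            parent[max(ra, rb)] = min(ra, rb)
    return [[find(parent, v) if v else 0 for v in l_row + r_row]
            for l_row, r_row in zip(left_labels, right_labels)]

image = [
    [1, 1, 0, 0, 1, 1],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 1],
]
result = label_in_two_tiles(image)
for row in result:
    print(row)
```

The shape that crosses the seam ends up with a single number, while the two separate dots in the bottom corners keep numbers of their own. With thousands of tiles the merging step itself becomes a serious piece of work, which is exactly the difficulty described above.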

Now you see that behind every microscopy image, there is much more hidden knowledge than just biology. In every step, a different area of expertise pops up. Physics helps us take the photographs and make sure that they reflect reality. Mathematical insights help us process them more efficiently. Technical experts like these are in demand in almost every sector, so what you end up doing depends entirely on your own interests.

Sources:

NVIDIA: the difference between processor cores and graphics cards

David Rotman: decreased increase in computer power